背景

我们说完了chatTTS的介绍以及安装,体验完之后我们发现一个问题,chatTTS无法合成长文本,一般不超过30秒,下面分享一个解决思路,权当抛砖引玉

解决思路

- 文本分割

- 文本清洗

- 循环生成语音

- 语音合成

文本分割

我们可以考虑spacy

安装

pip install spacy

对于中文需要加载一个模型

nlp = spacy.load('zh_core_web_sm')

我们可以在Github上找到该模型

下载地址

浏览器下载慢的话可以试试迅雷,或许有奇效,安装完成后,在命令行中cd到该目录

pip install zh_core_web_sm-3.7.0-py3-none-any.whl

使用

doc = nlp(text)

sentences = [sent.text.replace("n", "") for sent in doc.sents]

for sentence in sentences:

print(sentence)

文本清洗

我们参考ChatTTS项目对项目标点转成只有逗号和句号。并使用停顿来控制

character_map = {

':': ',',

...

'—': ',',

}

motion_map = {

',': '[uv_break]',

'。': '[lbreak]',

}

class TextUtils:

...

def apply_character_map(self):

"""

将字符映射到对应的字符

"""

translation_table = str.maketrans(character_map)

self.out = self.out.translate(translation_table)

def apply_motion_map(self):

"""

将字符映射到对应的动作

"""

translation_table = str.maketrans(motion_map)

self.out = self.out.translate(translation_table)

我们测试一下

if __name__ == "__main__":

tu = TextUtils(file=r"D:\斗破苍穹.txt")

tu.convert_half_width_to_full_width()

tu.apply_character_map()

tu.apply_motion_map()

print(tu.out)

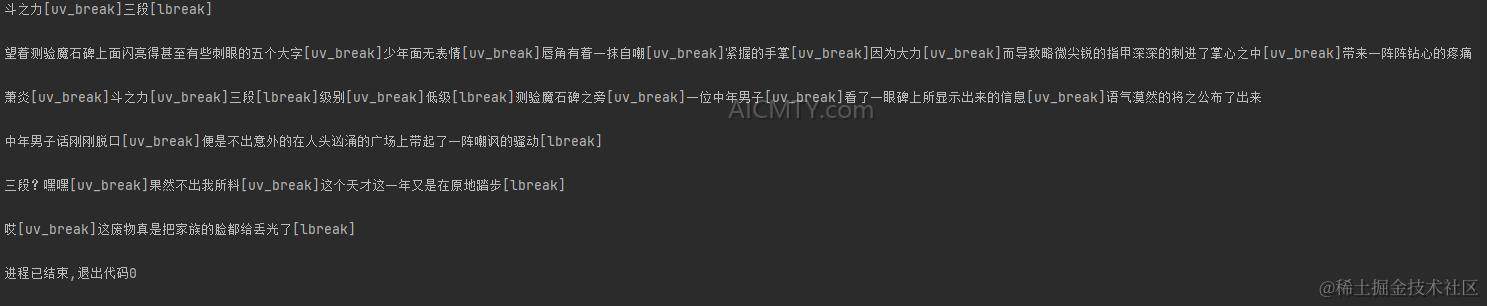

结果如下

我们推荐先将文本清洗完成,然后一行一行读取

循环生成语音

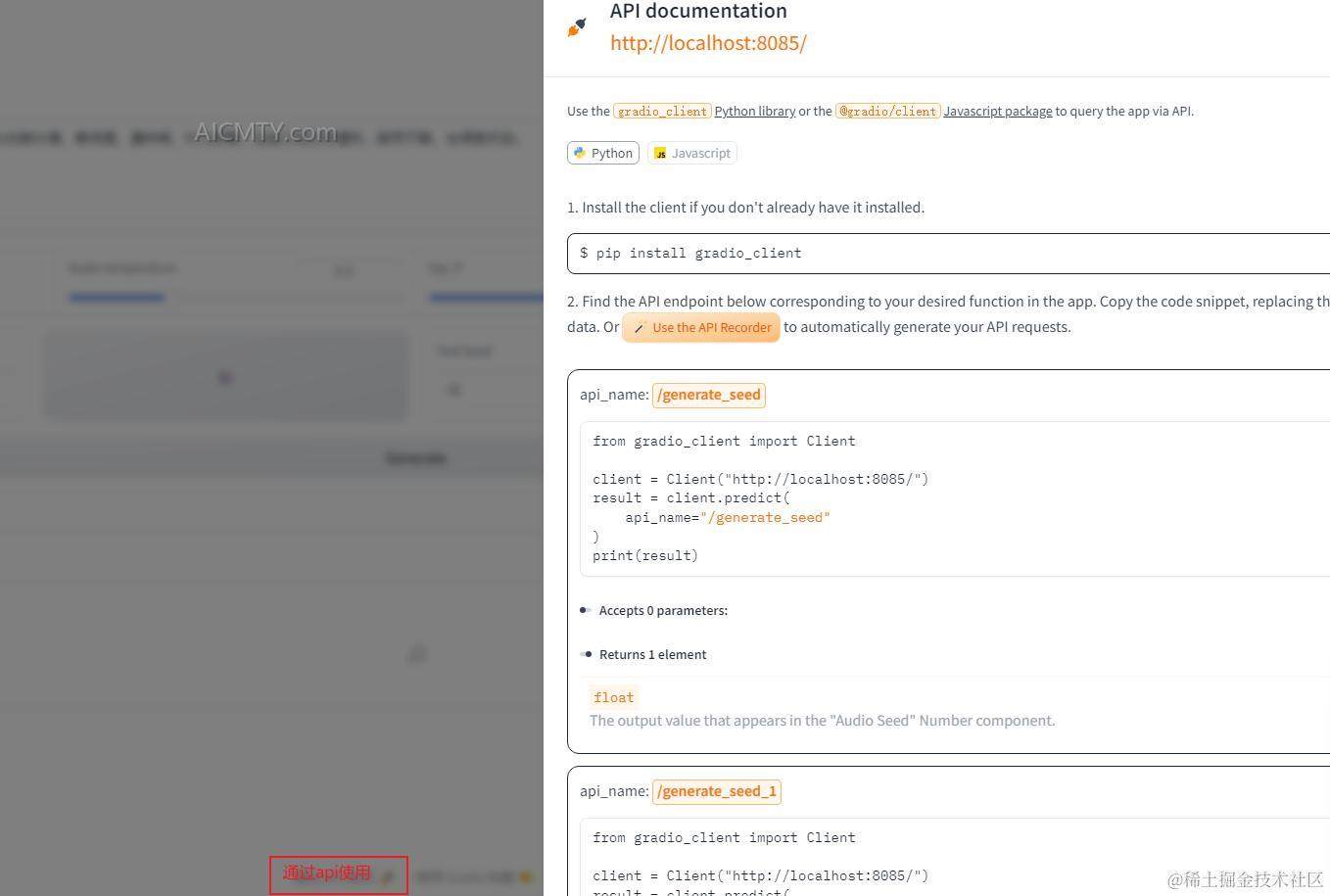

其实就是循环调用gradio的接口

可在项目底部找到说明

我们可将每次生成语音固定存在某个目录

语音合成

方案有很多,我们选择最常见的ffmpeg方案,需要事先将ffmpeg添加到环境变量

...

# 合并音频

cmd = [

"ffmpeg",

"-safe", "0",

"-f", "concat",

"-i", "input.txt",

"-c", "copy", "-y",

f"{source_directory}\output.wav"

]

log.info(" ".join(cmd))

完整代码

text_utils

character_map = {

':': ',',

';': ',',

'!': '。',

'(': ',',

')': ',',

'【': ',',

'】': ',',

'『': ',',

'』': ',',

'「': ',',

'」': ',',

'《': ',',

'》': ',',

'-': ',',

'‘': '',

'“': '',

'’': '',

'”': '',

':': ',',

';': ',',

'!': '.',

'(': ',',

')': ',',

'[': ',',

']': ',',

'>': ',',

'<': ',',

'-': ',',

'…': '',

'—': ',',

}

motion_map = {

',': '[uv_break]',

'。': '[lbreak]',

}

class TextUtils:

def __init__(self,text="",file=""):

if text != "":

self.text = text

else:

log.info("Reading file: " + file)

if file == "":

# 抛出异常

log.error("File not found")

return

with open(file, 'r', encoding='utf-8') as f:

self.text = f.read()

self.out = ""

def convert_half_width_to_full_width(self):

"""

将半角字符转换为全角字符

"""

out = ""

for char in self.text:

if ord(char) == 32:

out += " "

elif 33 <= ord(char) <= 126:

out += chr(ord(char) + 65248)

else:

out += char

self.out = out

def apply_character_map(self):

"""

将字符映射到对应的字符

"""

translation_table = str.maketrans(character_map)

self.out = self.out.translate(translation_table)

def apply_motion_map(self):

"""

将字符映射到对应的动作

"""

translation_table = str.maketrans(motion_map)

self.out = self.out.translate(translation_table)

main

from gradio_client import Client

from simpleaudio import WaveObject

import shutil

import spacy

from pathlib import Path

from datetime import datetime

import subprocess

from text_utils import TextUtils

# 对于中文

# https://github.com/explosion/spacy-models/releases/download/zh_core_web_sm-3.7.0/zh_core_web_sm-3.7.0-py3-none-any.whl

# pip install zh_core_web_sm-3.7.0-py3-none-any.whl

nlp = spacy.load('zh_core_web_sm')

import logging as log

log.basicConfig(level=log.INFO, format='%(asctime)s - %(name)s - %(levelname)s - %(message)s')

# 保存目录

target_home = "D:chattts_audio"

# 服务地址

url = "http://localhost:8080/"

class TTS:

def __init__(self, url):

self.client = Client(url)

def generate(self, text, temperature=0.3, top_P=0.7, top_K=20, audio_seed_input=123456789, text_seed_input=987654321,

refine_text_flag=False):

data = {

"text": text,

"temperature": temperature,

"top_P": top_P,

"top_K": top_K,

"audio_seed_input": audio_seed_input,

"text_seed_input": text_seed_input,

"refine_text_flag": refine_text_flag

}

result = self.client.predict(

api_name="/generate_audio",

**data

)

log.info(result[1])

return result[0]

def play(self, file_name):

wave_obj = WaveObject.from_wave_file(file_name)

play_obj = wave_obj.play()

play_obj.wait_done()

def move(self, file_name, target_name):

parent_path = Path(file_name).parent

shutil.move(file_name, target_name)

# 删除

shutil.rmtree(parent_path)

def gengerate_from_txt(self, txt):

# 读取文本文件

file_name = self.generate(txt)

timestatmp = datetime.now().strftime("%Y%m%d%H%M%S")

self.move(file_name, f"{target_home}/{timestatmp}.wav")

def generate_from_file(self, file_name):

# 读取文本文件

with open(file_name, "r", encoding="utf-8") as f:

text = f.read()

# 获取文件名不带后缀

file_name = Path(file_name).stem

save_dir = f"{target_home}/{file_name}"

Path(save_dir).mkdir(parents=True, exist_ok=True)

doc = nlp(text)

sentences = [sent.text.replace("n", "") for sent in doc.sents]

for sentence in sentences:

# 去除空行

if sentence.strip() == "":

continue

tu = TextUtils(text=sentence)

tu.convert_half_width_to_full_width()

tu.apply_character_map()

tu.apply_motion_map()

log.info(tu.out)

file_name = self.generate(tu.out)

timestatmp = datetime.now().strftime("%Y%m%d%H%M%S")

self.move(file_name, f"{save_dir}/{timestatmp}.wav")

def merge_audios(self, source_directory):

wav_list = Path(source_directory).glob("*.wav")

# 根据时间戳排序

wav_list = sorted(wav_list, key=lambda x: int(x.stem))

# 生成input.txt

with open("input.txt", "w", encoding="utf-8") as f:

for wav in wav_list:

# 路径分割为\

f.write(f"file '{wav.as_posix()}'n")

# 合并音频

cmd = [

"ffmpeg",

"-safe", "0",

"-f", "concat",

"-i", "input.txt",

"-c", "copy", "-y",

f"{source_directory}\output.wav"

]

log.info(" ".join(cmd))

subprocess.run(cmd, check=True)

# 删除

Path("input.txt").unlink()

def main():

tts = TTS(url)

file_path = "D:\斗破苍穹.txt"

tts.generate_from_file(file_path)

tts.merge_audios(str(Path(target_home).joinpath(Path(file_path).stem).resolve()))

if __name__ == "__main__":

main()